Understanding Kubernetes networking: overlay networks

In my previous posts we looked at how pod networking works and how Kubernetes uses Services for load balancing. Now we look at how the different networking solutions work and how they link the Kubernetes nodes together.

Kubernetes was built to run distributed systems over a cluster of machines, which makes networking a central part of every Kubernetes cluster. Kubernetes does not include a network solution; you have to choose one. Without one, each host would be a closed, isolated ecosystem. Many Kubernetes deployment guides provide instructions for deploying a networking plugin (CNI) to your cluster. The most popular solutions are Calico, Flannel, Weave, and Cilium. Most of the time you simply apply a YAML manifest or a Helm chart without understanding how these solutions work. Understanding the Kubernetes networking model will allow you to troubleshoot your applications running on Kubernetes more easily.

What is a CNI?

The CNI (Container Network Interface) project defines a specification for a generic, plugin-based networking solution for Linux containers. A CNI plugin is an executable, together with its configuration, that follows the CNI spec; we'll discuss some plugins later in this post.
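To make "an executable and its configuration" more concrete, here is a minimal sketch of the plugin side of the contract. It assumes the conventions of the CNI spec: the container runtime passes the operation in the `CNI_COMMAND` environment variable (`ADD`, `DEL`, `CHECK`, `VERSION`) and the network configuration as JSON on stdin, and the plugin prints a JSON result on stdout. The function `handle`, the `SUPPORTED_VERSIONS` list, and the fixed address are hypothetical, and the result only loosely follows the spec's result format; a real plugin would create the interface inside `CNI_NETNS` and obtain an address from an IPAM plugin.

```python
import json
import os
import sys

# Versions this hypothetical plugin claims to understand.
SUPPORTED_VERSIONS = ["0.4.0", "1.0.0"]


def handle(command: str, config: dict) -> dict:
    """Return the result object for one CNI invocation (sketch only: no
    interfaces, addresses, or routes are actually configured)."""
    if command == "VERSION":
        return {"cniVersion": "1.0.0", "supportedVersions": SUPPORTED_VERSIONS}
    if command == "ADD":
        # A real plugin would create an interface inside CNI_NETNS and get an
        # address from an IPAM plugin; this one reports a fixed placeholder.
        return {
            "cniVersion": config.get("cniVersion", "1.0.0"),
            "interfaces": [{"name": os.environ.get("CNI_IFNAME", "eth0")}],
            "ips": [{"address": "10.244.0.5/24", "interface": 0}],
        }
    if command in ("DEL", "CHECK"):
        # Treated here as no-op successes that print nothing.
        return {}
    raise ValueError(f"unsupported CNI_COMMAND: {command!r}")


def main() -> None:
    command = os.environ["CNI_COMMAND"]
    raw = sys.stdin.read()  # network configuration JSON from the runtime
    config = json.loads(raw) if raw.strip() else {}
    result = handle(command, config)
    if result:
        json.dump(result, sys.stdout)


# Only act as a plugin when a container runtime invokes us.
if __name__ == "__main__" and "CNI_COMMAND" in os.environ:
    main()
```

The runtime, not the user, runs this executable: it is found on the plugin search path and invoked once per pod sandbox for each operation.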

Overlay network

The concept of overlay networking has been around for a while, but it has returned to the spotlight with the rise of Docker and Kubernetes. An overlay is basically a virtual network of nodes and logical links built on top of an existing network. The aim of an overlay network is to enable a new service or function without having to reconfigure the entire network design. To connect pods across nodes, CNI plugins use two main approaches: VXLAN (Virtual Extensible LAN) encapsulation and plain Layer 3 routing.
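To see what VXLAN encapsulation adds to every packet, the sketch below packs and parses the 8-byte VXLAN header described in RFC 7348 (a flags byte with the "I" bit set, 24 reserved bits, a 24-bit VXLAN Network Identifier, and 8 more reserved bits) and computes how much of a 1500-byte underlay MTU is left for the pod's own IP packet. The function names are hypothetical, and the arithmetic assumes an IPv4 underlay without VLAN tags.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag: the VNI field is valid
OUTER_IPV4_HEADER = 20
UDP_HEADER = 8
VXLAN_HEADER = 8
INNER_ETHERNET_HEADER = 14   # the pod's Ethernet header, now carried as payload


def pack_vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a 24-bit VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) followed by 24 reserved bits.
    # Word 2: VNI (24 bits) followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header, checking the I flag."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("I flag not set")
    return vni_word >> 8


def inner_mtu(underlay_mtu: int = 1500) -> int:
    """Largest pod IP packet that still fits after VXLAN encapsulation."""
    overhead = OUTER_IPV4_HEADER + UDP_HEADER + VXLAN_HEADER + INNER_ETHERNET_HEADER
    return underlay_mtu - overhead


print(pack_vxlan_header(1).hex())  # 0800000000000100
print(inner_mtu())                 # 1450
```

These 50 bytes are why VXLAN-based plugins commonly give pod interfaces an MTU of 1450 on a 1500-byte network, and they are the overhead that a plain Layer 3 routing approach avoids.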

Node IPAM Controller

All pods are required to have an IP address, and it's important to ensure that every pod across the entire cluster has a unique one. kube-controller-manager allocates each node a dedicated subnet (podCIDR) from the cluster CIDR (the IP range for the cluster network). For example, if the overlay network is 10.244.0.0/16, each Kubernetes host gets a /24 segment: master01 gets 10.244.0.0/24, master02 gets 10.244.1.0/24, and so on. The podCIDR for a node can be listed using the following command:

```
$ kubectl get no <nodeName> -o json | jq -r '.spec.podCIDR'
10.244.0.0/24
```

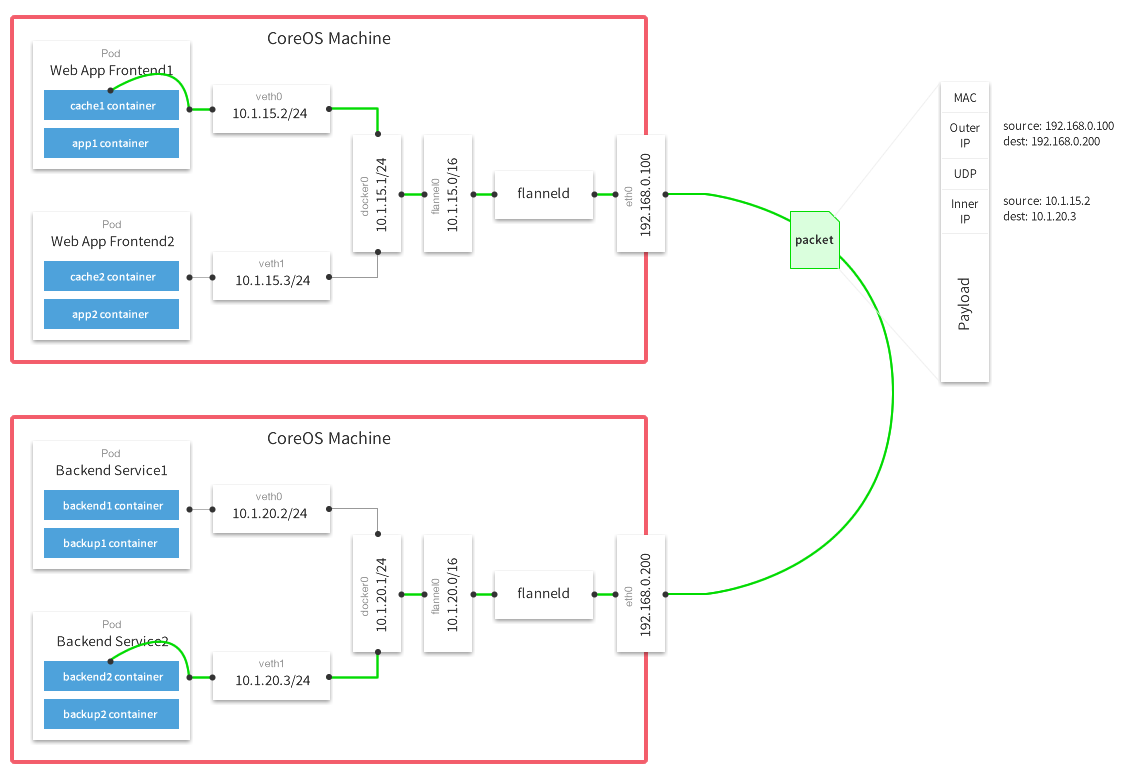
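Carving the cluster CIDR into per-node ranges is plain subnet arithmetic. The sketch below reproduces the example above with Python's `ipaddress` module: a /16 split into /24 blocks yields 256 node subnets of 256 addresses each. The helper `allocate_pod_cidrs` and its in-order assignment are simplifications for illustration; kube-controller-manager takes the cluster range and per-node mask size from its `--cluster-cidr` and `--node-cidr-mask-size` flags, and you should not rely on any particular assignment order.

```python
import ipaddress


def allocate_pod_cidrs(cluster_cidr: str, nodes: list[str],
                       node_prefix: int = 24) -> dict[str, str]:
    """Give each node the next free /node_prefix block of the cluster CIDR
    (hypothetical helper)."""
    blocks = ipaddress.ip_network(cluster_cidr).subnets(new_prefix=node_prefix)
    allocation = {}
    for node in nodes:
        try:
            allocation[node] = str(next(blocks))
        except StopIteration:
            raise RuntimeError(
                f"cluster CIDR {cluster_cidr} has no free block for {node}"
            ) from None
    return allocation


pod_cidrs = allocate_pod_cidrs("10.244.0.0/16", ["master01", "master02", "worker01"])
for node, cidr in pod_cidrs.items():
    print(node, cidr)
# master01 10.244.0.0/24
# master02 10.244.1.0/24
# worker01 10.244.2.0/24

# How many node blocks fit in the /16, and how many addresses each block has:
print(2 ** (24 - 16), ipaddress.ip_network("10.244.0.0/24").num_addresses)  # 256 256
```

The trade-off is visible in the numbers: a larger per-node block means more pod addresses per node but fewer nodes before the cluster CIDR runs out.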

Flannel

Flannel runs a small, single-binary agent called flanneld on each host, which is responsible for allocating a subnet lease to each host out of a larger, preconfigured address space. Flannel uses either the Kubernetes API or etcd directly to store the network configuration, the allocated subnets, and any auxiliary data (such as the host's public IP). Packets are forwarded using one of several backend mechanisms, including VXLAN and various cloud integrations. (Refer to Flannel)

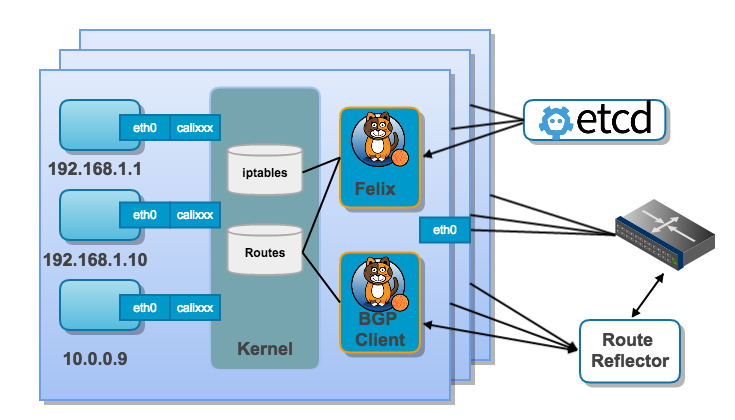
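Flannel's network configuration is a small JSON document (`net-conf.json` in the kube-flannel manifest) with a `Network` range and a `Backend` type. The sketch below parses such a document and models the core idea behind subnet leases: once every host holds a lease, forwarding a packet means finding the lease that contains the destination pod IP and sending the packet to that host's public IP. The lease table, host addresses, and `next_hop_host` function are hypothetical; flanneld itself learns leases from the Kubernetes API or etcd and programs kernel routes and VXLAN forwarding entries rather than doing lookups like this.

```python
import ipaddress
import json

NET_CONF = '{"Network": "10.244.0.0/16", "Backend": {"Type": "vxlan"}}'

# Hypothetical leases: each host's subnet mapped to that host's public IP.
LEASES = {
    "10.244.0.0/24": "192.168.1.10",
    "10.244.1.0/24": "192.168.1.11",
    "10.244.2.0/24": "192.168.1.12",
}


def next_hop_host(dst_pod_ip: str, net_conf: str = NET_CONF,
                  leases: dict[str, str] = LEASES) -> str:
    """Return the public IP of the host whose lease contains dst_pod_ip."""
    network = ipaddress.ip_network(json.loads(net_conf)["Network"])
    dst = ipaddress.ip_address(dst_pod_ip)
    if dst not in network:
        raise ValueError(f"{dst} is outside the flannel network {network}")
    for subnet, host_ip in leases.items():
        if dst in ipaddress.ip_network(subnet):
            return host_ip
    raise LookupError(f"no host holds a lease covering {dst}")


print(json.loads(NET_CONF)["Backend"]["Type"])  # vxlan
print(next_hop_host("10.244.1.37"))             # 192.168.1.11
```

With the VXLAN backend, the packet for 10.244.1.37 would then be encapsulated and sent across the underlay to 192.168.1.11.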

Calico

Calico originally used a pure Layer 3 approach to support multi-host network communication. On each host, a vRouter propagates workload reachability information (routes) to the rest of the data center using BGP. The pure Layer 3 approach avoids the packet encapsulation associated with Layer 2 overlay solutions, which simplifies diagnostics, reduces transport overhead, and improves performance. Calico also implements VXLAN, and newer versions can use VXLAN as the default encapsulation, depending on how Calico is installed.
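The pure Layer 3 model boils down to ordinary IP routing: each node announces the pod address blocks it hosts, and every other node installs a route to each block with the announcing node as the next hop, so pod packets travel unencapsulated. The sketch below simulates that with a list of announcements and a longest-prefix-match lookup. The node addresses, the /26 block size, and the broader /16 route are illustrative assumptions; in a real cluster the announcements are exchanged over BGP (Calico uses the BIRD daemon) and the routes live in the Linux kernel routing table.

```python
import ipaddress

# Hypothetical announcements a node might learn from its BGP peers:
# (pod address block, IP of the node hosting that block).
ANNOUNCEMENTS = [
    ("10.244.0.0/26", "192.168.1.10"),
    ("10.244.0.64/26", "192.168.1.11"),
    ("10.244.0.128/26", "192.168.1.12"),
    ("10.244.0.0/16", "192.168.1.1"),  # hypothetical broader route, to show LPM
]


def build_routing_table(announcements):
    """Install one route per announced block: destination network -> next hop."""
    return [(ipaddress.ip_network(prefix), ipaddress.ip_address(next_hop))
            for prefix, next_hop in announcements]


def lookup(table, dst_ip: str) -> str:
    """Pick the most specific matching route, as a kernel routing table does."""
    dst = ipaddress.ip_address(dst_ip)
    matches = [(net, hop) for net, hop in table if dst in net]
    if not matches:
        raise LookupError(f"no route to {dst}")
    _, hop = max(matches, key=lambda route: route[0].prefixlen)
    return str(hop)


table = build_routing_table(ANNOUNCEMENTS)
print(lookup(table, "10.244.0.70"))  # 192.168.1.11 (the /26 wins over the /16)
print(lookup(table, "10.244.5.9"))   # 192.168.1.1 (only the /16 matches)
```

Because the next hop is simply another node's IP, `ip route` and `traceroute` on a node show the real path, which is part of why the Layer 3 approach simplifies diagnostics.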

Weave Net

Connectivity is set up by the weave-net binary, which attaches pods to the weave Linux bridge. The bridge is in turn attached to the Open vSwitch kernel datapath, which forwards packets over the VXLAN interface towards the target node.

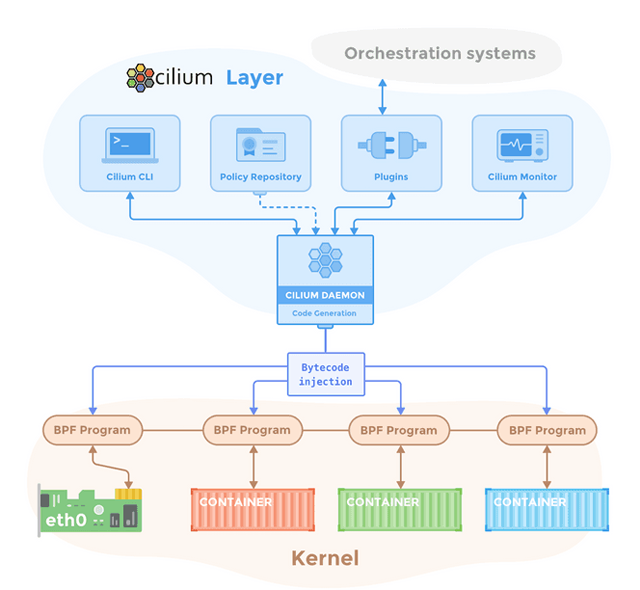

Cilium

Cilium is one of the most advanced and powerful Kubernetes networking solutions. At its core, it uses eBPF to provide a wide range of functionality, from traffic filtering for NetworkPolicies all the way to CNI and kube-proxy replacement. eBPF lets an application developer write a program, load it into the Linux kernel, and run it when certain events happen.

| Features/Capabilities | Calico | Flannel | Weave | Cilium |
|---|---|---|---|---|
| Network Model | BGP or VXLAN | VXLAN or UDP Channel | VXLAN or UDP Channel | VXLAN or Geneve |
| Encryption Channel | ✓ | ✓ | ✓ | ✓ |
| NetworkPolicies | ✓ | X | ✓ | ✓ |
| kube-proxy replacement | ✓ | X | X | ✓ |
| Egress Routing | X | X | X | ✓ |

Cluster mesh

Cluster mesh enables direct networking between Pods and Services in different Kubernetes clusters, either on-premises or in the cloud.

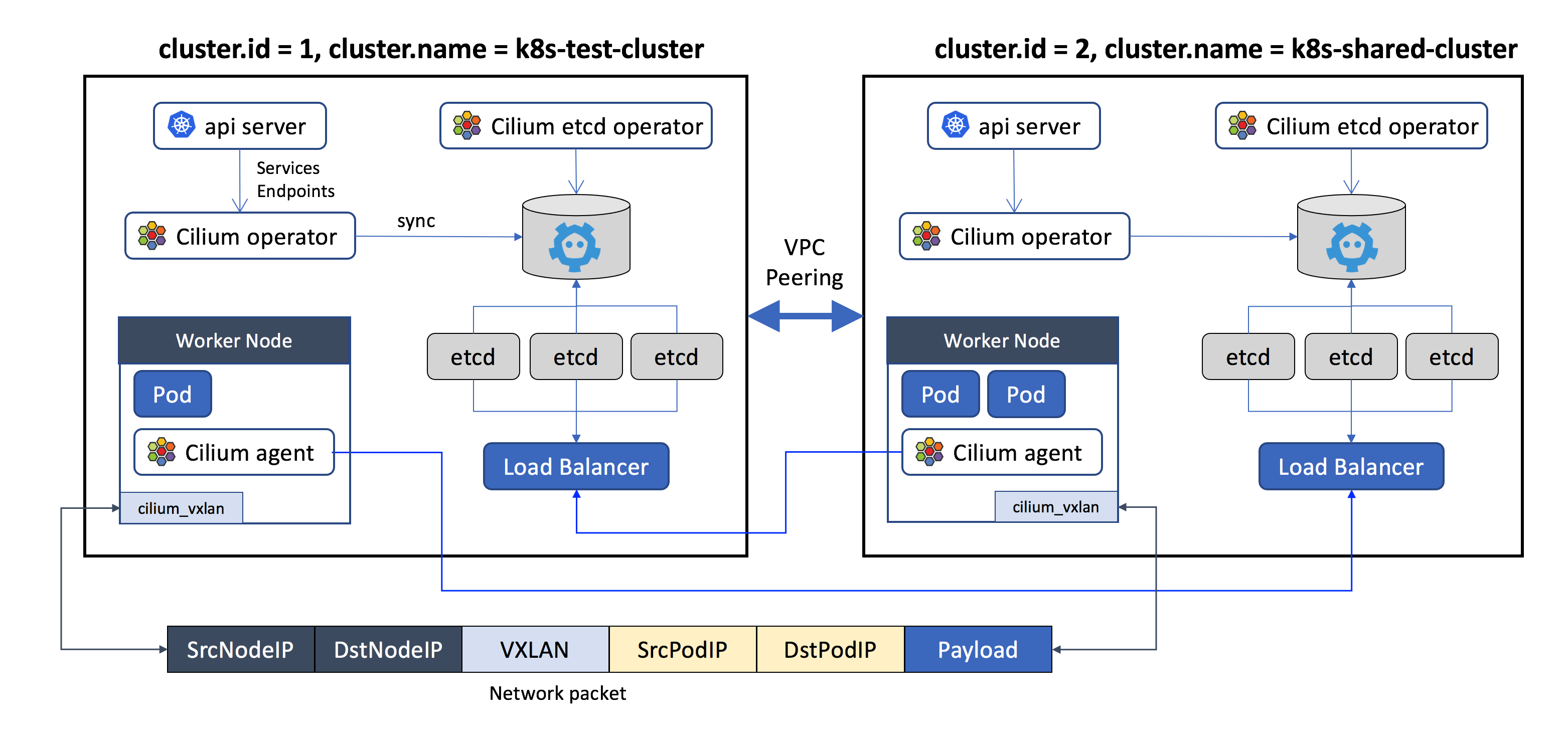

Cilium ClusterMesh

As Kubernetes gains adoption, teams are finding they must deploy and manage multiple clusters to facilitate features like geo-redundancy, scale, and fault isolation for their applications. Cilium’s multi-cluster implementation provides the following features:

- Inter-cluster pod-to-pod connectivity without gateways or proxies.

- Transparent service discovery across clusters using standard Kubernetes services and CoreDNS.

- Network policy enforcement across clusters.

- Encryption in transit between nodes within a cluster as well as across cluster boundaries.
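Pod-to-pod connectivity across clusters without gateways only works if a destination pod IP identifies exactly one pod in the whole mesh, so the pod CIDRs of the joined clusters must not overlap. The sketch below checks a set of clusters for overlapping pod ranges before connecting them. The cluster names and ranges are hypothetical, and `overlapping_pod_cidrs` is an illustrative helper, not part of Cilium.

```python
import ipaddress
from itertools import combinations

# Hypothetical clusters and their pod CIDRs.
CLUSTERS = {
    "cluster-eu": "10.244.0.0/16",
    "cluster-us": "10.245.0.0/16",
    "cluster-asia": "10.244.128.0/17",  # collides with cluster-eu
}


def overlapping_pod_cidrs(clusters: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of clusters whose pod CIDRs overlap."""
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in clusters.items()}
    return [(a, b) for a, b in combinations(networks, 2)
            if networks[a].overlaps(networks[b])]


print(overlapping_pod_cidrs(CLUSTERS))  # [('cluster-eu', 'cluster-asia')]
```

Planning distinct pod ranges per cluster up front is much easier than renumbering a running cluster later.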