How to deploy CRI-O with gVisor?

In this post I will show you how to install gVisor and use it as a container runtime in Kubernetes with CRI-O.

Parts of the K8S Security Lab series

Container Runtime Security

- Part1: How to deploy CRI-O with Firecracker?

- Part2: How to deploy CRI-O with gVisor?

- Part3: How to deploy containerd with Firecracker?

- Part4: How to deploy containerd with gVisor?

- Part5: How to deploy containerd with kata containers?

Advanced Kernel Security

- Part1: Hardening Kubernetes with seccomp

- Part2: Linux user namespace management with CRI-O in Kubernetes

- Part3: Hardening Kubernetes with seccomp

Network Security

- Part1: RKE2 Install With Calico

- Part2: RKE2 Install With Cilium

- Part3: CNI-Genie: network separation with multiple CNI

- Part3: Configure network with nmstate operator

- Part3: Kubernetes Network Policy

- Part4: Kubernetes with external Ingress Controller with vxlan

- Part4: Kubernetes with external Ingress Controller with bgp

- Part4: Central authentication with oauth2-proxy

- Part5: Secure your applications with Pomerium Ingress Controller

- Part6: CrowdSec Intrusion Detection System (IDS) for Kubernetes

- Part7: Kubernetes audit logs and Falco

Secure Kubernetes Install

- Part1: Best Practices for keeping Kubernetes Clusters Secure

- Part2: Kubernetes Secure Install

- Part3: Kubernetes Hardening Guide with CIS 1.6 Benchmark

- Part4: Kubernetes Certificate Rotation

User Security

- Part1: How to create kubeconfig?

- Part2: How to create Users in Kubernetes the right way?

- Part3: Kubernetes Single Sign-on with Pinniped OpenID Connect

- Part4: Kubectl authentication with Kuberos Deprecated !!

- Part5: Kubernetes authentication with Keycloak and gangway Deprecated !!

- Part6: kube-openid-connect 1.0 Deprecated !!

Image Security

Pod Security

- Part1: Using Admission Controllers

- Part2: RKE2 Pod Security Policy

- Part3: Kubernetes Pod Security Admission

- Part4: Kubernetes: How to migrate Pod Security Policy to Pod Security Admission?

- Part5: Pod Security Standards using Kyverno

- Part6: Kubernetes Cluster Policy with Kyverno

Secret Security

- Part1: Kubernetes and Vault integration

- Part2: Kubernetes External Vault integration

- Part3: ArgoCD and kubeseal to encrypt secrets

- Part4: Flux2 and kubeseal to encrypt secrets

- Part5: Flux2 and Mozilla SOPS to encrypt secrets

Monitoring and Observability

- Part6: K8S Logging And Monitoring

- Part7: Install Grafana Loki with Helm3

Backup

What is gVisor

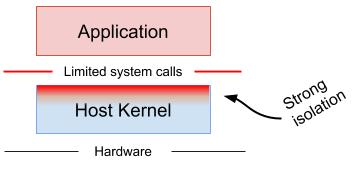

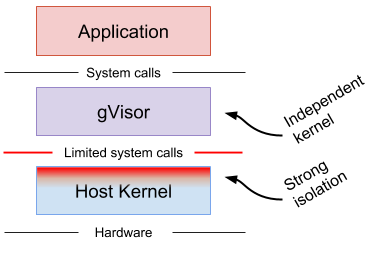

gVisor is an application kernel, written in Go, that implements a substantial portion of the Linux system call interface. It provides an additional layer of isolation between running applications and the host operating system.

gVisor includes an Open Container Initiative (OCI) runtime called runsc that makes it easy to work with existing container tooling. The runsc runtime integrates with Docker, CRI-O and Kubernetes, making it simple to run sandboxed containers.

Install gVisor

sudo dnf install epel-release nano wget -y

nano gvisor.sh

#!/bin/bash
(
  set -e
  ARCH=$(uname -m)
  URL=https://storage.googleapis.com/gvisor/releases/release/latest/${ARCH}
  wget ${URL}/runsc ${URL}/runsc.sha512 \
    ${URL}/containerd-shim-runsc-v1 ${URL}/containerd-shim-runsc-v1.sha512
  sha512sum -c runsc.sha512 \
    -c containerd-shim-runsc-v1.sha512
  rm -f *.sha512
  chmod a+rx runsc containerd-shim-runsc-v1
  sudo mv runsc containerd-shim-runsc-v1 /usr/local/bin
)
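Before running the script, you can preview which files it will fetch. The helper below is a minimal sketch (the function name gvisor_release_urls is hypothetical, not part of gVisor); it only rebuilds the same URLs from `uname -m` and downloads nothing.

```shell
#!/usr/bin/env bash
# Hypothetical dry-run helper: print the four files gvisor.sh downloads for an
# architecture (default: the output of `uname -m`), without fetching anything.
gvisor_release_urls() {
  local arch=${1:-$(uname -m)}
  local base="https://storage.googleapis.com/gvisor/releases/release/latest/${arch}"
  local f
  for f in runsc runsc.sha512 containerd-shim-runsc-v1 containerd-shim-runsc-v1.sha512; do
    printf '%s/%s\n' "$base" "$f"
  done
}
```

For example, `gvisor_release_urls aarch64` lists the ARM64 artifacts, and calling it without an argument uses the current machine's architecture, exactly like the script.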

bash gvisor.sh

...

runsc: OK

containerd-shim-runsc-v1: OK
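The OK lines come from `sha512sum -c`, which recomputes each file's hash and compares it with the value stored in the matching .sha512 file. Because the script runs under `set -e`, a mismatch stops it before anything is moved into /usr/local/bin. The sketch below reproduces that check on a local file; verify_sha512 is a hypothetical helper name, and `--quiet` assumes GNU coreutils.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if DIR/NAME still matches the hash stored
# in DIR/NAME.sha512 ("<hash>  <name>", the format sha512sum writes and
# `sha512sum -c` reads). --quiet (GNU coreutils) suppresses the "OK" line;
# on a mismatch sha512sum prints "NAME: FAILED" and returns non-zero.
verify_sha512() {
  (cd "$1" && sha512sum --quiet -c "$2.sha512")
}
```

For example, after downloading runsc and runsc.sha512 by hand into the current directory, `verify_sha512 . runsc` should succeed before you move the binary into place.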

Install and configure CRI-O

export VERSION=1.21

sudo curl -L -o /etc/yum.repos.d/devel_kubic_libcontainers_stable.repo https://download.opensuse.org/repositories/devel:kubic:libcontainers:stable/CentOS_8/devel:kubic:libcontainers:stable.repo

sudo curl -L -o /etc/yum.repos.d/devel_kubic_libcontainers_stable_cri-o_${VERSION}.repo https://download.opensuse.org/repositories/devel:kubic:libcontainers:stable:cri-o:${VERSION}/CentOS_8/devel:kubic:libcontainers:stable:cri-o:${VERSION}.repo

yum install cri-o

runsc implements cgroups using cgroupfs, so I use the cgroupfs driver in both the CRI-O and the Kubernetes (kubelet) configuration.

nano /etc/crio/crio.conf

[crio.runtime]

conmon_cgroup = "pod"

cgroup_manager = "cgroupfs"

selinux = false

nano /etc/containers/registries.conf

registries = [

"quay.io",

"docker.io"

]

unqualified-search-registries = [

"quay.io",

"docker.io"

]

Now I need to configure CRI-O to use runsc as an additional low-level runtime engine.

mkdir -p /etc/crio/crio.conf.d/
cat <<EOF > /etc/crio/crio.conf.d/99-gvisor
# Register the gVisor runtime binary (runsc) as a runtime handler named "runsc"
[crio.runtime.runtimes.runsc]
runtime_path = "/usr/local/bin/runsc"
EOF

systemctl enable crio

systemctl restart crio

systemctl status crio
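The name after crio.runtime.runtimes. is the handler name that a Kubernetes RuntimeClass refers to, so runsc here has to match the handler field of the RuntimeClass created later. A minimal sketch that lists the handlers declared in CRI-O config files; crio_handlers is a hypothetical helper and assumes one table header per line, as in the drop-in above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every runtime handler name declared as a
# [crio.runtime.runtimes.<name>] table header in the given config files.
crio_handlers() {
  sed -nE 's/^[[:space:]]*\[crio\.runtime\.runtimes\.([A-Za-z0-9_-]+)\][[:space:]]*$/\1/p' "$@"
}
```

For example, `crio_handlers /etc/crio/crio.conf /etc/crio/crio.conf.d/*` should include runsc in its output once the drop-in is in place.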

Install tools

yum install git -y

sudo git clone https://github.com/ahmetb/kubectx /opt/kubectx

sudo ln -s /opt/kubectx/kubectx /usr/local/sbin/kubectx

sudo ln -s /opt/kubectx/kubens /usr/local/sbin/kubens

Install Kubernetes

Configure the kernel modules and parameters for Kubernetes.

cat <<EOF | sudo tee /etc/modules-load.d/CRI-O.conf

overlay

br_netfilter

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

sysctl --system
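`sysctl --system` only reports what it tried to apply. A minimal sketch to confirm the values by reading them directly from /proc/sys; note that the net.bridge.* entries exist only while the br_netfilter module is loaded. The function names and the SYSCTL_ROOT variable are hypothetical, the latter added only so the check can be pointed at a fake tree for testing (it defaults to the real root).

```shell
#!/usr/bin/env bash
# Hypothetical helper: map a sysctl key to its /proc/sys file, e.g.
# net.ipv4.ip_forward -> ${SYSCTL_ROOT}/proc/sys/net/ipv4/ip_forward
sysctl_path() {
  printf '%s/proc/sys/%s\n' "${SYSCTL_ROOT:-}" "$(printf '%s' "$1" | tr . /)"
}

# Hypothetical helper: succeed only if KEY exists and currently equals WANT.
check_sysctl() {
  local key=$1 want=$2 path got
  path=$(sysctl_path "$key")
  if [ ! -r "$path" ]; then
    echo "missing: $key (is the module that provides it loaded?)" >&2
    return 1
  fi
  got=$(cat "$path")
  if [ "$got" = "$want" ]; then
    echo "ok: $key = $got"
  else
    echo "wrong: $key = $got (expected $want)" >&2
    return 1
  fi
}
```

On the node itself you could run `for k in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward; do check_sysctl "$k" 1; done`.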

Disable swap for Kubernetes.

free -h

swapoff -a

sed -i.bak -r 's/(.+ swap .+)/#\1/' /etc/fstab

free -h
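The sed expression above only matches when swap has a literal space character on each side, so an /etc/fstab entry separated by tabs is missed, and running the command a second time turns # into ##. A slightly more defensive variant, assuming GNU sed: comment_out_swap is a hypothetical helper that comments out active entries with a swap field, skips lines that are already commented, and keeps the same .bak backup.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prefix active fstab entries that contain a "swap" field
# with '#'. Lines that already start with '#' (after optional whitespace) are
# left alone, and both spaces and tabs around "swap" are matched. GNU sed's
# -i.bak keeps a backup copy next to the file, like the original command.
comment_out_swap() {
  sed -i.bak -E '/^[[:space:]]*[^#[:space:]].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}
```

Usage: `swapoff -a && comment_out_swap /etc/fstab`, then check the result with `free -h` as above.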

Then I add the Kubernetes repo and install the packages.

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

EOF

CRIP_VERSION=$(crio --version | awk '{print $3}')

yum install kubelet-$CRIP_VERSION kubeadm-$CRIP_VERSION kubectl-$CRIP_VERSION -y
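CRI-O follows the Kubernetes minor version (CRI-O 1.21 targets Kubernetes 1.21), which is why kubelet, kubeadm and kubectl are pinned to the CRI-O version. `awk '{print $3}'` runs on every line that `crio --version` prints; a slightly stricter sketch, assuming the first line has the form "crio version X.Y.Z", reads only that line. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `crio --version` output on stdin and print X.Y.Z
# from a first line of the form "crio version X.Y.Z" (prints nothing otherwise).
crio_version_from() {
  awk 'NR == 1 && $1 == "crio" && $2 == "version" { print $3; exit }'
}

# Hypothetical helper: strip the patch level, e.g. 1.21.0 -> 1.21
minor_version() {
  printf '%s\n' "${1%.*}"
}
```

With these, the assignment above could read `CRIP_VERSION=$(crio --version | crio_version_from)`, and `minor_version "$CRIP_VERSION"` gives the Kubernetes minor version to compare against.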

Initialize Kubernetes with the CRI-O engine.

export IP=172.17.13.10

dnf install -y iproute-tc

systemctl enable kubelet.service

# Set the node IP (needed on multi-interface hosts) and the cgroupfs cgroup driver

echo 'KUBELET_EXTRA_ARGS="--node-ip='$IP' --cgroup-driver=cgroupfs"' > /etc/sysconfig/kubelet

kubeadm config images pull --cri-socket=unix:///var/run/crio/crio.sock --kubernetes-version=$CRIP_VERSION

kubeadm init --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=$IP --kubernetes-version=$CRIP_VERSION --cri-socket=unix:///var/run/crio/crio.sock

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubectl get no

crictl ps

kubectl taint nodes $(hostname) node-role.kubernetes.io/master:NoSchedule-
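CRI-O and the kubelet have to agree on the cgroup driver, cgroupfs in this setup. The sketch below uses hypothetical helpers that compare cgroup_manager in a CRI-O config file with --cgroup-driver in the kubelet's extra arguments; they assume the simple one-setting-per-line layout used in this post and do not look at CRI-O drop-in overrides.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: compare CRI-O's cgroup manager with the kubelet's
# cgroup driver. They do not read CRI-O drop-in files.

# Print the last cgroup_manager = "..." value found in a CRI-O config file.
crio_cgroup_manager() {
  sed -nE 's/^[[:space:]]*cgroup_manager[[:space:]]*=[[:space:]]*"([^"]*)".*/\1/p' "$1" | tail -n 1
}

# Print the last --cgroup-driver=... value found in a kubelet environment file.
kubelet_cgroup_driver() {
  sed -nE 's/.*--cgroup-driver=([a-z]+).*/\1/p' "$1" | tail -n 1
}

# Succeed (and print OK) only if both files name the same, non-empty driver.
check_cgroup_match() {
  local crio kubelet
  crio=$(crio_cgroup_manager "$1")
  kubelet=$(kubelet_cgroup_driver "$2")
  if [ -n "$crio" ] && [ "$crio" = "$kubelet" ]; then
    echo "OK: CRI-O and kubelet both use $crio"
  else
    echo "MISMATCH: CRI-O=${crio:-unset} kubelet=${kubelet:-unset}" >&2
    return 1
  fi
}
```

Usage on this node: `check_cgroup_match /etc/crio/crio.conf /etc/sysconfig/kubelet`.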

Initialize the network

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

kubectl apply -f kube-flannel.yml

OR

kubectl create -f https://docs.projectcalico.org/manifests/tigera-operator.yaml

wget https://docs.projectcalico.org/manifests/custom-resources.yaml

nano custom-resources.yaml

...

cidr: 10.244.0.0/16

...

kubectl apply -f custom-resources.yaml
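Whichever CNI you pick, its pod CIDR has to match the --pod-network-cidr passed to kubeadm init (10.244.0.0/16 here, which is also flannel's default network). A minimal sketch with hypothetical helper names that pulls the cidr: values out of custom-resources.yaml with sed (a line-based approximation, not a YAML parser) and compares them:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every "cidr: <value>" found in a YAML file,
# whether written as "cidr: x" or as a list item "- cidr: x".
yaml_cidrs() {
  sed -nE 's#^[[:space:]]*(- )?cidr:[[:space:]]*([0-9./]+).*#\2#p' "$1"
}

# Hypothetical helper: succeed only if WANT appears among the file's cidr values.
check_pod_cidr() {
  local want=$1 file=$2 got
  got=$(yaml_cidrs "$file")
  if [ -z "$got" ]; then
    echo "no cidr field found in $file" >&2
    return 1
  fi
  if printf '%s\n' "$got" | grep -qxF -- "$want"; then
    echo "OK: $file uses $want"
  else
    echo "MISMATCH: $file has [$got], kubeadm uses $want" >&2
    return 1
  fi
}
```

Usage, before applying the file: `check_pod_cidr 10.244.0.0/16 custom-resources.yaml`.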

Start Deployment

First I create a RuntimeClass for gVisor, then start a pod that uses this RuntimeClass.

cat <<EOF | kubectl apply -f -
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: gvisor
handler: runsc
EOF

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  labels:
    app: untrusted
  name: www-gvisor
spec:
  runtimeClassName: gvisor
  containers:
  - image: nginx:1.18
    name: www
    ports:
    - containerPort: 80
EOF

$ kubectl get po
NAME         READY   STATUS    RESTARTS   AGE
www-gvisor   1/1     Running   0          2m47s

$ kubectl describe po www-gvisor
...
Events:
  Type    Reason     Age    From               Message
  ----    ------     ----   ----               -------
  Normal  Scheduled  2m42s  default-scheduler  Successfully assigned default/www-kata to alma8
  Normal  Pulled     2m13s  kubelet            Container image "nginx:1.18" already present on machine
  Normal  Created    2m13s  kubelet            Created container www
  Normal  Started    2m11s  kubelet            Started container www
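A Running status only shows that the pod started; it does not prove that runsc was used. One quick check is the kernel log as seen from inside the container: gVisor's application kernel prints its own synthetic boot messages, which typically begin with "Starting gVisor...", whereas a regular runc container either sees the host kernel's log or is denied access. A minimal sketch with a hypothetical helper name, assuming the image ships dmesg:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read dmesg output on stdin and succeed if it looks like
# gVisor's synthetic kernel log (which typically starts with "Starting gVisor...").
looks_like_gvisor() {
  grep -q 'Starting gVisor'
}
```

Usage: `kubectl exec www-gvisor -- dmesg | looks_like_gvisor && echo "www-gvisor runs inside gVisor"`.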