How to deploy containerd with Firecracker?

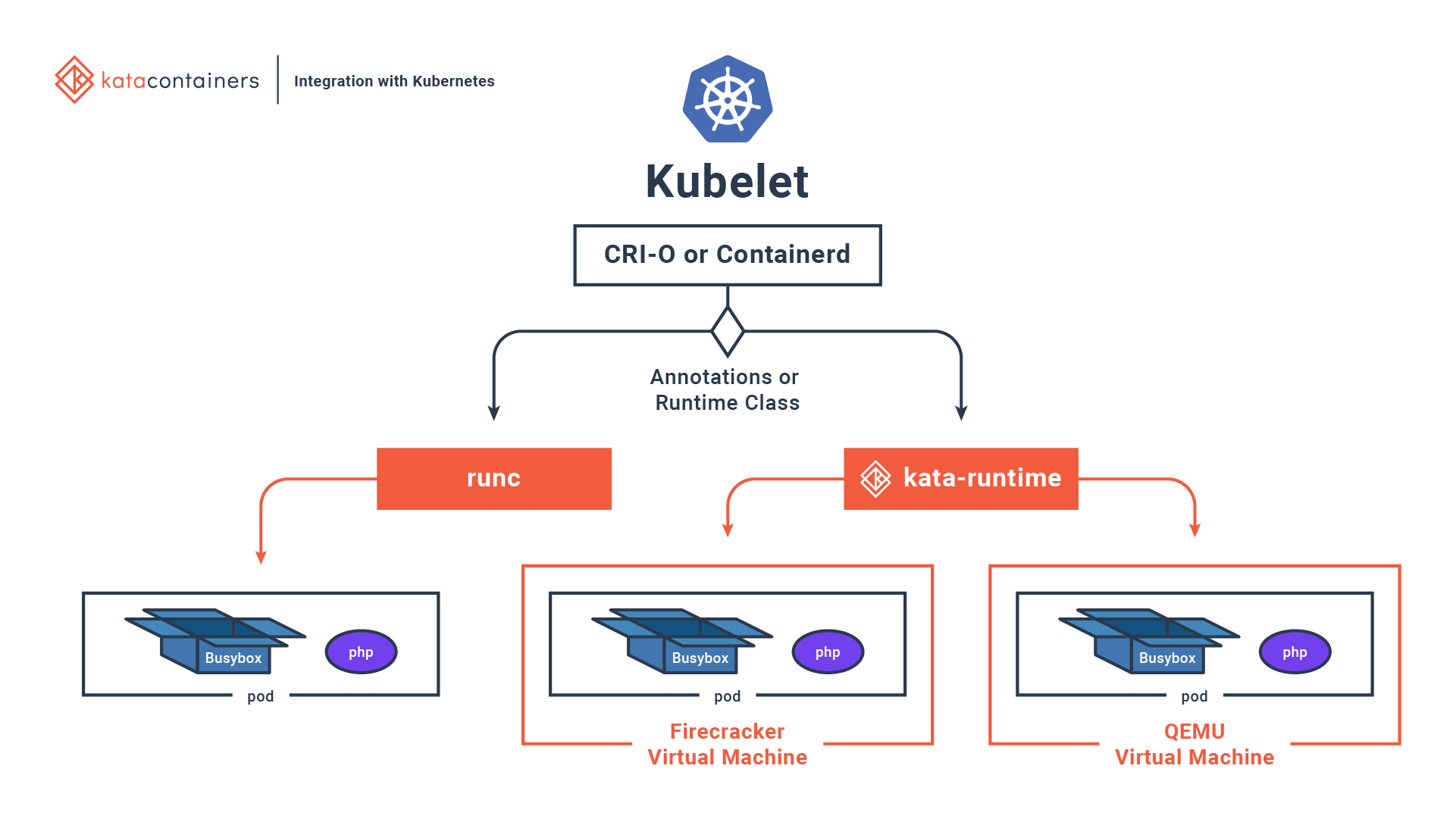

In this post I will show you how to install and use Kata Containers with the Firecracker engine in Kubernetes.

Parts of the K8S Security Lab series

Container Runtime Security

- Part1: How to deploy CRI-O with Firecracker?

- Part2: How to deploy CRI-O with gVisor?

- Part3: How to deploy containerd with Firecracker?

- Part4: How to deploy containerd with gVisor?

- Part5: How to deploy containerd with kata containers?

Advanced Kernel Security

- Part1: Hardening Kubernetes with seccomp

- Part2: Linux user namespace management with CRI-O in Kubernetes

- Part3: Hardening Kubernetes with seccomp

Network Security

- Part1: RKE2 Install With Calico

- Part2: RKE2 Install With Cilium

- Part3: CNI-Genie: network separation with multiple CNI

- Part3: Configure network with nmstate operator

- Part3: Kubernetes Network Policy

- Part4: Kubernetes with external Ingress Controller with vxlan

- Part4: Kubernetes with external Ingress Controller with bgp

- Part4: Central authentication with oauth2-proxy

- Part5: Secure your applications with Pomerium Ingress Controller

- Part6: CrowdSec Intrusion Detection System (IDS) for Kubernetes

- Part7: Kubernetes audit logs and Falco

Secure Kubernetes Install

- Part1: Best Practices for keeping Kubernetes Clusters Secure

- Part2: Kubernetes Secure Install

- Part3: Kubernetes Hardening Guide with CIS 1.6 Benchmark

- Part4: Kubernetes Certificate Rotation

User Security

- Part1: How to create kubeconfig?

- Part2: How to create Users in Kubernetes the right way?

- Part3: Kubernetes Single Sign-on with Pinniped OpenID Connect

- Part4: Kubectl authentication with Kuberos Deprecated !!

- Part5: Kubernetes authentication with Keycloak and gangway Deprecated !!

- Part6: kube-openid-connect 1.0 Deprecated !!

Image Security

Pod Security

- Part1: Using Admission Controllers

- Part2: RKE2 Pod Security Policy

- Part3: Kubernetes Pod Security Admission

- Part4: Kubernetes: How to migrate Pod Security Policy to Pod Security Admission?

- Part5: Pod Security Standards using Kyverno

- Part6: Kubernetes Cluster Policy with Kyverno

Secret Security

- Part1: Kubernetes and Vault integration

- Part2: Kubernetes External Vault integration

- Part3: ArgoCD and kubeseal to encrypt secrets

- Part4: Flux2 and kubeseal to encrypt secrets

- Part5: Flux2 and Mozilla SOPS to encrypt secrets

Monitoring and Observability

- Part6: K8S Logging And Monitoring

- Part7: Install Grafana Loki with Helm3

Backup

What is the Kata container engine?

Kata Containers is an open source community working to build a secure container runtime with lightweight virtual machines that feel and perform like containers, but provide stronger workload isolation using hardware virtualization technology as a second layer of defense. (Source: Kata Containers Website )

Why should you use Firecracker?

Firecracker is a virtual machine monitor (VMM) that uses KVM to run lightweight microVMs. It was built for container and serverless workloads, so it is designed to use very few resources.

Enable KVM

I will use Vagrant and VirtualBox to run the AlmaLinux VM, so first I need to enable nested virtualization on the VM:

VBoxManage modifyvm alma8 --nested-hw-virt on

After the VM has booted, check for the virtualization flag inside it:

egrep --color -i "svm|vmx" /proc/cpuinfo

If you find one of these flags, everything is OK. Now we need to load the KVM kernel modules.

sudo modprobe kvm-intel

sudo modprobe vhost_vsock
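The modprobe line above assumes an Intel CPU (the vmx flag). As a minimal sketch, the module could be chosen from the CPU flags instead. kvm_module_for is a hypothetical helper name of my own, and the optional file argument only exists so the function can be tried against a sample file.

```bash
#!/usr/bin/env bash
# Print the KVM module that matches the CPU flags in a cpuinfo-style file.
# kvm_module_for is a hypothetical helper, not part of any tool.
kvm_module_for() {
  local cpuinfo="${1:-/proc/cpuinfo}"
  if grep -qw vmx "$cpuinfo"; then
    echo kvm_intel      # Intel VT-x
  elif grep -qw svm "$cpuinfo"; then
    echo kvm_amd        # AMD-V
  else
    echo "no vmx/svm flag found in $cpuinfo" >&2
    return 1
  fi
}
```

It could then be used as sudo modprobe "$(kvm_module_for)". modprobe treats dashes and underscores in module names the same way, so kvm_intel and kvm-intel refer to the same module.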

Install and configure containerd

To work with Firecracker, containerd must use the devmapper snapshotter plugin.

sudo dnf install epel-release nano wget -y

sudo dnf config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

sudo dnf install -y containerd.io lvm2

First I installed containerd. Devmapper is the only storage driver supported by Firecracker, so now I create the LVM thin pool for the devmapper snapshotter:

sudo pvcreate /dev/sdb

sudo vgcreate containerd /dev/sdb

sudo lvcreate --wipesignatures y -n data containerd -l 95%VG

sudo lvcreate --wipesignatures y -n meta containerd -l 1%VG

sudo lvconvert -y \
  --zero n \
  -c 512K \
  --thinpool containerd/data \
  --poolmetadata containerd/meta

sudo nano /etc/lvm/profile/containerd-thinpool.profile

activation {

thin_pool_autoextend_threshold=80

thin_pool_autoextend_percent=20

}

sudo lvchange --metadataprofile containerd-thinpool containerd/data

sudo lvchange --monitor y containerd/data

Running sudo dmsetup ls should now produce output like this:

containerd-data (253:2)

containerd-data_tdata (253:1)

containerd-data_tmeta (253:0)
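In a provisioning script I would rather check this listing than read it by eye. A minimal sketch, assuming the output format shown above (device name in the first column); has_dm_device is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Succeed if the named device appears in `dmsetup ls` output read from stdin.
# has_dm_device is a hypothetical helper; it only compares the first column.
has_dm_device() {
  local name="$1"
  awk -v n="$name" '$1 == n { found = 1 } END { exit !found }'
}
```

Usage would look like sudo dmsetup ls | has_dm_device containerd-data, which returns a non-zero exit code when the thin pool is missing.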

sudo mkdir -p /etc/containerd

containerd config default | sudo tee /etc/containerd/config.toml

nano /etc/containerd/config.toml

[plugins]

...

[plugins."io.containerd.grpc.v1.cri".containerd]

snapshotter = "devmapper"

...

[plugins."io.containerd.snapshotter.v1.devmapper"]

pool_name = "containerd-data"

base_image_size = "8GB"

async_remove = false

echo "runtime-endpoint: unix:///run/containerd/containerd.sock" > /etc/crictl.yaml

# Restart containerd

sudo systemctl restart containerd

systemctl enable containerd.service

Test that it works correctly:

systemctl restart containerd

ctr images pull --snapshotter devmapper docker.io/library/hello-world:latest

ctr run --snapshotter devmapper docker.io/library/hello-world:latest test

$ ctr plugin ls

TYPE ID PLATFORMS STATUS

io.containerd.content.v1 content - ok

io.containerd.snapshotter.v1 aufs linux/amd64 error

io.containerd.snapshotter.v1 devmapper linux/amd64 ok

io.containerd.snapshotter.v1 native linux/amd64 ok

io.containerd.snapshotter.v1 overlayfs linux/amd64 ok

io.containerd.snapshotter.v1 zfs linux/amd64 error

io.containerd.metadata.v1 bolt - ok

io.containerd.differ.v1 walking linux/amd64 ok

io.containerd.gc.v1 scheduler - ok

io.containerd.service.v1 introspection-service - ok

io.containerd.service.v1 containers-service - ok

io.containerd.service.v1 content-service - ok

io.containerd.service.v1 diff-service - ok

io.containerd.service.v1 images-service - ok

io.containerd.service.v1 leases-service - ok

io.containerd.service.v1 namespaces-service - ok

io.containerd.service.v1 snapshots-service - ok

io.containerd.runtime.v1 linux linux/amd64 ok

io.containerd.runtime.v2 task linux/amd64 ok

io.containerd.monitor.v1 cgroups linux/amd64 ok

io.containerd.service.v1 tasks-service - ok

io.containerd.internal.v1 restart - ok

io.containerd.grpc.v1 containers - ok

io.containerd.grpc.v1 content - ok

io.containerd.grpc.v1 diff - ok

io.containerd.grpc.v1 events - ok

io.containerd.grpc.v1 healthcheck - ok

io.containerd.grpc.v1 images - ok

io.containerd.grpc.v1 leases - ok

io.containerd.grpc.v1 namespaces - ok

io.containerd.internal.v1 opt - ok

io.containerd.grpc.v1 snapshots - ok

io.containerd.grpc.v1 tasks - ok

io.containerd.grpc.v1 version - ok

io.containerd.grpc.v1 cri linux/amd64 ok
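The line that matters in this listing is the devmapper snapshotter with status ok. A small sketch for pulling a single status out of the table, assuming the column layout shown above (TYPE, ID, PLATFORMS, STATUS); plugin_status is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Print the STATUS column for one plugin from `ctr plugin ls` output on stdin.
# Exits non-zero if no row matches. plugin_status is a hypothetical helper.
plugin_status() {
  local type="$1" id="$2"
  awk -v t="$type" -v i="$id" '
    $1 == t && $2 == i { print $NF; found = 1 }
    END { exit !found }'
}
```

For example, sudo ctr plugin ls | plugin_status io.containerd.snapshotter.v1 devmapper should print ok once the thin pool and config.toml are set up correctly.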

Install nerdctl

I prefer nerdctl to the ctr and crictl CLIs, so I will install it.

wget https://github.com/containerd/nerdctl/releases/download/v0.11.0/nerdctl-0.11.0-linux-amd64.tar.gz

tar -xzf nerdctl-0.11.0-linux-amd64.tar.gz

mv nerdctl /usr/local/bin

nerdctl ps
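The version number appears three times in the commands above, so a small helper keeps it in one place. This is only a sketch: nerdctl_url is a hypothetical name, and it assumes other releases follow the same file naming as the v0.11.0 URL used above.

```bash
#!/usr/bin/env bash
# Build the release tarball URL for a given nerdctl version and architecture.
# nerdctl_url is a hypothetical helper; the naming pattern is copied from the
# v0.11.0 URL above and assumed (not verified) to hold for other releases.
nerdctl_url() {
  local version="$1" arch="${2:-amd64}"
  echo "https://github.com/containerd/nerdctl/releases/download/v${version}/nerdctl-${version}-linux-${arch}.tar.gz"
}
```

With this, the download becomes wget "$(nerdctl_url 0.11.0)".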

Install tools

yum install git -y

sudo git clone https://github.com/ahmetb/kubectx /opt/kubectx

sudo ln -s /opt/kubectx/kubectx /usr/local/sbin/kubectx

sudo ln -s /opt/kubectx/kubens /usr/local/sbin/kubens

Install Kubernetes

Configure Kernel parameters for Kubernetes.

cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf

overlay

br_netfilter

kvm-intel

vhost_vsock

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

sysctl --system
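To double-check that the file written above really contains all three settings, something like the following sketch could be used. missing_sysctls is a hypothetical helper name; it only inspects the file and does not query the running kernel.

```bash
#!/usr/bin/env bash
# Keys taken from the sysctl file written above.
REQUIRED_SYSCTLS="net.bridge.bridge-nf-call-iptables net.ipv4.ip_forward net.bridge.bridge-nf-call-ip6tables"

# Print every required key that the given file does not set to 1.
# Returns 0 only if nothing is missing. missing_sysctls is a hypothetical helper.
missing_sysctls() {
  local conf="$1" key missing=0
  for key in $REQUIRED_SYSCTLS; do
    # Split each line on "=", strip blanks, and compare key and value exactly.
    if ! awk -F= -v k="$key" '
        { gsub(/[ \t]/, "", $1); gsub(/[ \t]/, "", $2) }
        $1 == k && $2 == "1" { ok = 1 }
        END { exit !ok }' "$conf"; then
      echo "$key"
      missing=1
    fi
  done
  return "$missing"
}
```

Calling missing_sysctls /etc/sysctl.d/99-kubernetes-cri.conf prints nothing and returns 0 when the file is complete.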

Disable swap for Kubernetes.

free -h

swapoff -a

sed -i.bak -r 's/(.+ swap .+)/#\1/' /etc/fstab

free -h
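Instead of reading the free -h output, a script can look at /proc/swaps, which lists one line per active swap device after a header line. A minimal sketch; swap_is_off is a hypothetical helper name, and the optional argument is only there so it can be tried against a sample file.

```bash
#!/usr/bin/env bash
# Succeed if no swap device is active.
# /proc/swaps has one header line followed by one line per active swap area.
# swap_is_off is a hypothetical helper.
swap_is_off() {
  local swaps="${1:-/proc/swaps}"
  awk 'NR > 1 { n++ } END { exit (n > 0) }' "$swaps"
}
```

For example: swap_is_off || echo "swap is still enabled".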

Then I will add the Kubernetes repo and install the packages.

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

EOF

dnf install kubelet kubeadm kubectl -y

Start Kubernetes with containerd engine.

export IP=172.17.13.10

dnf install -y iproute-tc

systemctl enable kubelet.service

echo "KUBELET_EXTRA_ARGS=--cgroup-driver=systemd" | tee /etc/sysconfig/kubelet

kubeadm config images pull --cri-socket=unix:///run/containerd/containerd.sock

kubeadm init --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=$IP --cri-socket=unix:///run/containerd/containerd.sock

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubectl get no

nerdctl -n k8s.io ps

crictl ps

kubectl taint nodes $(hostname) node-role.kubernetes.io/master:NoSchedule-

Initialize network

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

kubectl apply -f kube-flannel.yml

OR

kubectl create -f https://docs.projectcalico.org/manifests/tigera-operator.yaml

wget https://docs.projectcalico.org/manifests/custom-resources.yaml

nano custom-resources.yaml

...

cidr: 10.244.0.0/16

...

kubectl apply -f custom-resources.yaml
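The manual edit in nano can also be scripted. The sketch below rewrites every cidr: line in a file to the pod network CIDR passed to kubeadm init. set_pod_cidr is a hypothetical helper name, it relies on GNU sed (-i and -E), and it assumes the downloaded custom-resources.yaml contains a single cidr: line.

```bash
#!/usr/bin/env bash
# Replace the value of every "cidr:" key in a YAML file, keeping indentation.
# set_pod_cidr is a hypothetical helper; requires GNU sed for -i.
set_pod_cidr() {
  local file="$1" cidr="$2"
  # "#" is used as the sed delimiter because the CIDR itself contains "/".
  sed -E -i "s#^([[:space:]]*cidr:).*#\1 ${cidr}#" "$file"
}
```

Usage: set_pod_cidr custom-resources.yaml 10.244.0.0/16, followed by the kubectl apply shown above.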

Install Kata container engine

If all the nodes are ready, deploy a DaemonSet with kata-deploy to install Kata Containers and Firecracker:

$ kubectl get no

NAME STATUS ROLES AGE VERSION

alma8 Ready control-plane,master 2m31s v1.22.1

# Installing the latest image

kubectl apply -f https://raw.githubusercontent.com/kata-containers/kata-containers/main/tools/packaging/kata-deploy/kata-rbac/base/kata-rbac.yaml

kubectl apply -f https://raw.githubusercontent.com/kata-containers/kata-containers/main/tools/packaging/kata-deploy/kata-deploy/base/kata-deploy.yaml

# OR

# Installing the stable image

kubectl apply -f https://raw.githubusercontent.com/kata-containers/kata-containers/main/tools/packaging/kata-deploy/kata-rbac/base/kata-rbac.yaml

kubectl apply -f https://raw.githubusercontent.com/kata-containers/kata-containers/main/tools/packaging/kata-deploy/kata-deploy/base/kata-deploy-stable.yaml
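The precondition that all nodes are Ready can be checked in a script before the kata-deploy manifests are applied. A minimal sketch that parses the kubectl get no table shown above; all_nodes_ready is a hypothetical helper name, and a status such as Ready,SchedulingDisabled is deliberately treated as not ready.

```bash
#!/usr/bin/env bash
# Succeed only if stdin (output of `kubectl get no`) lists at least one node
# and every node has exactly the status "Ready".
# all_nodes_ready is a hypothetical helper.
all_nodes_ready() {
  awk 'NR > 1 { n++; if ($2 != "Ready") bad = 1 }
       END { exit (bad || n == 0) }'
}
```

For example: kubectl get no | all_nodes_ready && echo "safe to deploy kata-deploy".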

Verify the pod:

kubens kube-system

$ kubectl get DaemonSet

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kata-deploy 1 1 1 1 1 <none> 3m43s

kube-proxy 1 1 1 1 1 kubernetes.io/os=linux 10m

$ kubectl get po kata-deploy-5zwmq

NAME READY STATUS RESTARTS AGE

kata-deploy-5zwmq 1/1 Running 0 4m24

kubectl logs kata-deploy-5zwmq

copying kata artifacts onto host

#!/bin/bash

KATA_CONF_FILE=/opt/kata/share/defaults/kata-containers/configuration-fc.toml /opt/kata/bin/containerd-shim-kata-v2 "$@"

#!/bin/bash

KATA_CONF_FILE=/opt/kata/share/defaults/kata-containers/configuration-qemu.toml /opt/kata/bin/containerd-shim-kata-v2 "$@"

#!/bin/bash

KATA_CONF_FILE=/opt/kata/share/defaults/kata-containers/configuration-clh.toml /opt/kata/bin/containerd-shim-kata-v2 "$@"

Add Kata Containers as a supported runtime for containerd

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.kata]

runtime_type = "io.containerd.kata.v2"

privileged_without_host_devices = true

pod_annotations = ["io.katacontainers.*"]

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.kata.options]

ConfigPath = "/opt/kata/share/defaults/kata-containers/configuration.toml"

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.kata-fc]

runtime_type = "io.containerd.kata-fc.v2"

privileged_without_host_devices = true

pod_annotations = ["io.katacontainers.*"]

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.kata-fc.options]

ConfigPath = "/opt/kata/share/defaults/kata-containers/configuration-fc.toml"

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.kata-qemu]

runtime_type = "io.containerd.kata-qemu.v2"

privileged_without_host_devices = true

pod_annotations = ["io.katacontainers.*"]

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.kata-qemu.options]

ConfigPath = "/opt/kata/share/defaults/kata-containers/configuration-qemu.toml"

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.kata-clh]

runtime_type = "io.containerd.kata-clh.v2"

privileged_without_host_devices = true

pod_annotations = ["io.katacontainers.*"]

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.kata-clh.options]

ConfigPath = "/opt/kata/share/defaults/kata-containers/configuration-clh.toml"

node/alma8 labeled

$ ll /opt/kata/bin/

total 157532

-rwxr-xr-x. 1 root root 4045032 Jul 19 06:10 cloud-hypervisor

-rwxr-xr-x. 1 root root 42252997 Jul 19 06:12 containerd-shim-kata-v2

-rwxr-xr-x. 1 root root 3290472 Jul 19 06:14 firecracker

-rwxr-xr-x. 1 root root 2589888 Jul 19 06:14 jailer

-rwxr-xr-x. 1 root root 16686 Jul 19 06:12 kata-collect-data.sh

-rwxr-xr-x. 1 root root 37429099 Jul 19 06:12 kata-monitor

-rwxr-xr-x. 1 root root 54149384 Jul 19 06:12 kata-runtime

-rwxr-xr-x. 1 root root 17521656 Jul 19 06:18 qemu-system-x86_64

Restart containerd to enable the new config:

systemctl restart containerd
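Before restarting, it is worth confirming that kata-deploy really added the handlers to the containerd config. A small sketch, assuming the table names look exactly like the ones in the log above; has_runtime_handler is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Succeed if the containerd config file defines a CRI runtime handler with
# the given name. The match is a fixed string including the closing bracket,
# so "kata" does not match "kata-fc" and the ".options" tables are ignored.
# has_runtime_handler is a hypothetical helper.
has_runtime_handler() {
  local conf="$1" handler="$2"
  grep -Fq "[plugins.\"io.containerd.grpc.v1.cri\".containerd.runtimes.${handler}]" "$conf"
}
```

Usage: has_runtime_handler /etc/containerd/config.toml kata-fc.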

Now I can start a Kata container from the command line.

sudo ctr image pull docker.io/library/hello-world:latest

sudo ctr run --runtime io.containerd.run.kata-qemu.v2 -t --rm docker.io/library/hello-world:latest hello

sudo ctr run --runtime io.containerd.run.kata-clh.v2 -t --rm docker.io/library/hello-world:latest hello

ctr run --snapshotter devmapper --runtime io.containerd.run.kata-fc.v2 -t docker.io/library/hello-world:latest hello
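The ctr commands use io.containerd.run.kata-fc.v2 while config.toml uses io.containerd.kata-fc.v2. Both work because containerd derives the shim binary name from the last two dot-separated parts of the runtime name. The sketch below only mirrors that naming rule for illustration; shim_binary_for is a hypothetical helper name, not a containerd command.

```bash
#!/usr/bin/env bash
# Print the shim binary name containerd looks up for a runtime name:
# containerd-shim-<second-to-last part>-<last part>.
# shim_binary_for is a hypothetical helper that mirrors this rule.
shim_binary_for() {
  local rt="$1" name version
  version="${rt##*.}"   # last part, e.g. v2
  rt="${rt%.*}"         # drop the last part
  name="${rt##*.}"      # second-to-last part, e.g. kata-fc
  echo "containerd-shim-${name}-${version}"
}
```

Both shim_binary_for io.containerd.kata-fc.v2 and shim_binary_for io.containerd.run.kata-fc.v2 give the same binary name, which corresponds to one of the wrapper scripts printed in the kata-deploy log.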

Start Deployment

First I create a RuntimeClass for kata-fc then start a pod with this RuntimeClass.

kubens default

kubectl apply -f - <<EOF
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: kata-fc
handler: kata-fc
EOF

cat<<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  labels:
    app: untrusted
  name: www-kata-fc
spec:
  runtimeClassName: kata-fc
  containers:
  - image: nginx:1.18
    name: www
    ports:
    - containerPort: 80
EOF

kubectl apply -f - <<EOF
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: kata-qemu
handler: kata-qemu
EOF

cat<<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  labels:
    app: untrusted
  name: www-kata-qemu
spec:
  runtimeClassName: kata-qemu
  containers:
  - image: nginx:1.18
    name: www
    ports:
    - containerPort: 80
EOF

kubectl apply -f - <<EOF
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: kata-clh
handler: kata-clh
EOF

cat<<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  labels:
    app: untrusted
  name: www-kata-clh
spec:
  runtimeClassName: kata-clh
  containers:
  - image: nginx:1.18
    name: www
    ports:
    - containerPort: 80
EOF
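The three RuntimeClass and Pod pairs above differ only in the handler name, so they can also be generated from one template. A sketch under that assumption; kata_manifests is a hypothetical helper name and the manifest content is copied from the blocks above.

```bash
#!/usr/bin/env bash
# Print a RuntimeClass and a test Pod for the given Kata handler name.
# kata_manifests is a hypothetical helper; the YAML mirrors the manifests above.
kata_manifests() {
  local handler="$1"
  cat <<EOF
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: ${handler}
handler: ${handler}
---
apiVersion: v1
kind: Pod
metadata:
  labels:
    app: untrusted
  name: www-${handler}
spec:
  runtimeClassName: ${handler}
  containers:
  - image: nginx:1.18
    name: www
    ports:
    - containerPort: 80
EOF
}
```

It could be applied in a loop: for rt in kata-fc kata-qemu kata-clh; do kata_manifests "$rt" | kubectl apply -f -; done.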

$ kubectl get po

NAME READY STATUS RESTARTS AGE

www-kata-clh 1/1 Running 0 59s

www-kata-fc 1/1 Running 0 12s

www-kata-qemu 1/1 Running 0 69s
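A Running status alone does not prove that a pod is inside a microVM. One common check is to compare kernel releases: a Kata pod boots its own guest kernel, so uname -r inside the pod normally differs from the host. A minimal sketch, assuming kubectl is configured and the image ships uname; uses_guest_kernel is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Succeed if the pod reports a different kernel release than this host.
# Returns 2 if the kernel release could not be read from the pod.
# uses_guest_kernel is a hypothetical helper.
uses_guest_kernel() {
  local pod="$1" host_kernel pod_kernel
  host_kernel="$(uname -r)"
  pod_kernel="$(kubectl exec "$pod" -- uname -r)" || return 2
  [ "$host_kernel" != "$pod_kernel" ]
}
```

For example: uses_guest_kernel www-kata-fc && echo "www-kata-fc runs on its own kernel". The function has to run on the node itself, otherwise the comparison is against the wrong host kernel.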